Three major tech companies – Amazon, Microsoft, and IBM – have recently begun backing away from aspects of their facial recognition technology businesses amid heightened racial bias concerns in the United States.

Nationwide protests following the killing of George Floyd have put the impact of racial bias in policing at center stage. In recent days, the three tech giants have each made announcements about pulling back on their work in the facial recognition space. IBM cited biases specifically, Microsoft said it wants to protect human rights, and Amazon cited no specific rationale behind its decision.

On June 10, Amazon announced a one-year moratorium on police use of Amazon’s facial recognition technology, Amazon Rekognition. In a blog post, Amazon said it will still allow organizations such as Thorn, the International Center for Missing and Exploited Children, and Marinus Analytics to use its technologies for their efforts to rescue human trafficking victims and reunite missing children with their families.

“We’ve advocated that governments should put in place stronger regulations to govern the ethical use of facial recognition technology, and in recent days, Congress appears ready to take on this challenge,” Amazon said. “We hope this one-year moratorium might give Congress enough time to implement appropriate rules, and we stand ready to help if requested.”

Going one step further, Microsoft President Brad Smith said the company will not sell facial recognition technology to police departments until there is a Federal law regulating the technology. At a Washington Post event on June 10, Smith said the company has not sold its facial recognition technology to police departments.

“We will not sell facial-recognition technology to police departments in the United States until we have a national law in place, grounded in human rights that will govern this technology,” Smith said. “The bottom line for us is to protect the human rights of people as this technology is deployed.”

In a June 8 letter to Congress addressing “racial justice reform,” IBM CEO Arvind Krishna said the company “has sunset its general purpose facial recognition and analysis software products.”

“IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency,” Krishna wrote. “We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.”

The private sector push for a Federal law regulating facial recognition technologies follows the introduction by Sens. Jeff Merkley, D-Ore., and Cory Booker, D-N.J., of the Ethical Use of Facial Recognition Act on Feb. 12. The legislation would institute a moratorium on all Federal use of facial recognition technology until Congress passes legislation outlining “specific uses for the data” collected.

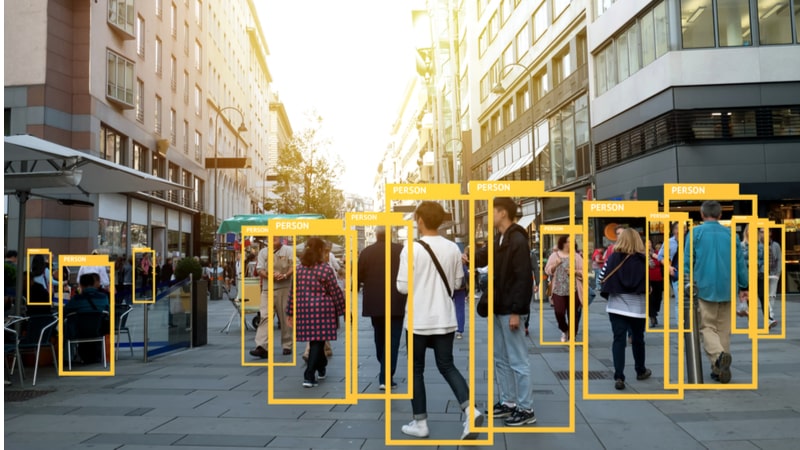

While the objections to facial recognition technologies have been reignited in recent weeks, the campaign to halt their use in policing is longstanding. For years, civil rights organizations have warned that “face recognition systems are powerful – but they can also be biased.”

More recently, states have also made moves to limit the use of facial recognition software by police departments. In October of 2019, California signed into law the Body Camera Accountability Act, which prohibits “a law enforcement agency or law enforcement officer from installing, activating, or using any biometric surveillance system in connection with an officer camera or data collected by an officer camera.” The legislation says that “facial recognition and other biometric surveillance technology pose unique and significant threats to the civil rights and civil liberties of residents and visitors,” and that such technologies have misidentified women, young people, and people of color.