Editor’s Note: The following story is taken from a book-length work authored by a senior Federal IT official currently working in government. This is one part of an extensive, firsthand account of how IT decisions are made, the obstacles standing in the way of real change in government technology management, and what one career Federal IT employee really thinks about the way government does IT.

Because the author is a current government employee and is concerned about the impact this may have on their career, we’ve agreed to publish this series of weekly excerpts under the author’s chosen pseudonym—Demosthenes.

MeriTalk has agreed not to make substantive changes to any of the chapters.

— Dan Verton, Executive Editor

FedRAMP

The Federal Risk and Authorization Management Program (FedRAMP) is not authorized in law. It grew out of Vivek Kundra’s thinking in the 25 Point Plan[1] but actually began under Steve VanRoekel. The FedRAMP memo[2] was signed in December 2011, and on the face of it, the program seems like a good idea. The core ideas are:

- We want to increase the rate of cloud adoption by Federal agencies.

- The Certification and Accreditation (C&A) work for a given cloud service is largely the same no matter which agency performs it.

- We should perform that work once and reuse it everywhere.

These are good ideas, right? Nobody will disagree with any of this. The problem is that the implementation hasn’t exactly worked out the way it was intended.

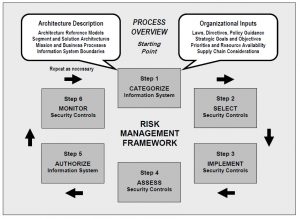

My big gripe is with the Joint Authorization Board (JAB). I’m going to take a jab at the JAB, so bear with me while I go through my proof that this is a stupid idea. FISMA (2002) told NIST to develop security standards, and NIST created a whole bunch of special publications in the “800” series[3]. Within that series, NIST 800-37[4] is the guide for applying the Risk Management Framework, the process agencies use to manage risk and decide whether to authorize a system.

What FedRAMP proposes is, pretty much, to substitute the JAB’s decision for the CIO’s decision to issue an ATO. Hey, I know the people on the JAB. Luke McCormack, David Shive, and Terry Halvorsen are all good people. But the problem is that under FISMA, this decision is supposed to be made by the CIO. The process is somewhat involved, but it essentially comes down to the business owner and the CIO having a discussion in Step 5 (Authorize) and then deciding whether to issue the “Authority To Operate” (ATO). This is a big deal because if we say that you have an ATO, that means we have reviewed the controls of the capability against the risk tolerances of the organization, and we can accept the risk imposed by taking this new capability into the production environment. This is one of the biggest decisions a CIO makes.

When a cloud capability is hacked and spills a bunch of data, do you think that Congress will call the JAB in for a hearing? No. They will call in the CIO, and because of that, the CIO cannot outsource his or her consideration of the ATO.

The other part of this is that different agencies have different levels of risk tolerance, and those tolerances change based on the sensitivity of the organization and the data it uses. Luke, Dave, and Terry are all good people, but they made their determination in a vacuum. They didn’t consider the factors on the ground here in my agency because they don’t know my agency.

The final rant in this argument comes from a purely digital services perspective. Here we have a process that we aren’t satisfied with because it takes too long. When you think about friction in a process, are we likely to reduce friction by inserting a new choke point? My argument is that the JAB is another decision point that necessarily slows the process.

My last criticism of FedRAMP requires putting yourself in the shoes of an app developer. Before we do that, we have to recognize that NIST 800-53 has about 250 separate and unique controls. They range from AC-3, access enforcement, to MP-6, media sanitization. In the app economy, developers are going to focus on the meaningful interactions and rely on the stack to provide what the stack does. That means they are going to build their applications to run on Amazon, Google, Azure, whatever. If I were a developer, I would want my application to run on top of virtually any platform, hence the term platform independence.

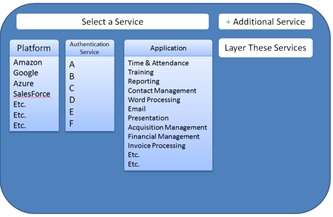

But when you want to apply this to FedRAMP and the 800-53 controls, you have to be smart. Let’s say I make an app that allows people to record their time and attendance, and I build it to run on pretty much any environment. It would be great to get a FedRAMP authorization, because that would, hopefully, allow agencies to get my solution up and running faster than they otherwise could. BUT…

I need to figure out which stack to take through the process. Do I run it on Amazon, Google, Salesforce, Microsoft, or any of the thousand other players in this space? Wouldn’t it be better to determine which of the 250 controls specified in 800-53 apply to “MY” solution and which ones are inherited from the platform? If I could do that, then I would say that MP-6 and the rest of the Media Protection controls don’t apply to my application; they are instead inherited from whatever platform you choose to run my app on. AC-3 and most of the Access Control family, on the other hand, will apply to my app, and I will document how I handle each of those controls at the low, moderate, and high baselines.
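To make the “mine versus inherited” split concrete, here is a minimal Python sketch. The control list is a tiny, hypothetical slice of an 800-53 baseline, and which families count as inherited from the platform (Media Protection and Physical and Environmental Protection here) is an assumption for illustration, not FedRAMP guidance. The `split_responsibility` helper is likewise made up for this example.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical, heavily abbreviated slice of an 800-53 baseline (real baselines are far larger).
BASELINE: List[str] = ["AC-2", "AC-3", "AC-6", "AU-2", "MP-2", "MP-6", "PE-3"]

# Assumption: control families treated as fully inherited from whatever platform hosts the app.
PLATFORM_FAMILIES: Set[str] = {"MP", "PE"}

# Assumption: individual controls the app developer documents and owns.
APP_CONTROLS: Set[str] = {"AC-2", "AC-3", "AC-6"}


def split_responsibility(
    baseline: Iterable[str], platform_families: Set[str], app_controls: Set[str]
) -> Dict[str, List[str]]:
    """Sort each control into inherited, app-owned, or unassigned (still needs a decision)."""
    result: Dict[str, List[str]] = {"inherited": [], "app_owned": [], "unassigned": []}
    for control in baseline:
        family = control.split("-")[0]  # e.g. "MP-6" -> "MP"
        if family in platform_families:
            result["inherited"].append(control)
        elif control in app_controls:
            result["app_owned"].append(control)
        else:
            result["unassigned"].append(control)
    return result


if __name__ == "__main__":
    buckets = split_responsibility(BASELINE, PLATFORM_FAMILIES, APP_CONTROLS)
    for bucket, controls in buckets.items():
        print(f"{bucket}: {', '.join(controls) or '(none)'}")
```

Run as-is, this lists MP-2, MP-6, and PE-3 as inherited, AC-2, AC-3, and AC-6 as app-owned, and leaves AU-2 unassigned. That leftover bucket is the point: with tight boundaries, the developer documents only what it owns, and anything unclaimed stands out instead of being buried in a platform-specific package.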

This issue is all about how we draw the boundaries around a system and an application. I would argue that we have to draw tight (smallest possible) boundaries. This would allow agencies to assemble platforms and applications and mix and match them to get a risk profile that is likely to be acceptable to their organization.

With that in mind, I think there is a place for FedRAMP in the future. I don’t think the current GSA team would appreciate my version of it, though, because it is so radically different from what they are offering agencies today.

In my version, FedRAMP would be a lock box that contains the methods by which each cloud offeror meets, or doesn’t meet, specific controls from NIST 800-53. My approach would not have a JAB or convey any kind of approval. Instead, it would hold an objective description of the controls as assessed by an independent third-party assessment organization (3PAO).

My approach would be like a layer cake. The customer (agency) would go into FedRAMP to generate a list of controls drawn from a myriad of different services. FedRAMP would be the authoritative source for the control documentation, but the data would be stored in tables that let people assemble the controls in countless ways, each tailored to the products and services the agency already uses. That would let the agency identify the most efficient path to deploying cloud services at a level of risk consistent with its tolerances.
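Here is a minimal Python sketch of that layer-cake idea, assuming the lock box boils down to a table that maps each assessed service to the controls its 3PAO documented. The service names, control sets, and the `assemble` and `gaps` helpers are all hypothetical and do not reflect any actual FedRAMP data format.

```python
from typing import Dict, List, Set

# Hypothetical lock-box records: each service maps to the 800-53 controls a 3PAO
# documented it as meeting. Names and control sets are made up for illustration.
LOCK_BOX: Dict[str, Set[str]] = {
    "iaas-provider-a": {"PE-3", "MP-6", "SC-7"},
    "paas-provider-b": {"AU-2", "SC-7"},
    "time-and-attendance-app": {"AC-2", "AC-3", "AC-6"},
}


def assemble(stack: List[str], baseline: List[str]) -> Dict[str, List[str]]:
    """Map each baseline control to the layers in the stack whose documentation covers it."""
    return {
        control: [svc for svc in stack if control in LOCK_BOX.get(svc, set())]
        for control in baseline
    }


def gaps(coverage: Dict[str, List[str]]) -> List[str]:
    """Controls no layer covers; the agency would have to address these itself."""
    return [control for control, layers in coverage.items() if not layers]


if __name__ == "__main__":
    baseline = ["AC-2", "AC-3", "AU-2", "MP-6", "PE-3", "SC-7"]
    # Compare two candidate stacks for the same app.
    for stack in (
        ["iaas-provider-a", "time-and-attendance-app"],
        ["iaas-provider-a", "paas-provider-b", "time-and-attendance-app"],
    ):
        print(" + ".join(stack), "-> gaps:", gaps(assemble(stack, baseline)) or "none")
```

The first stack leaves AU-2 uncovered; adding the hypothetical PaaS layer closes that gap. Note that the output is only a coverage map, not an approval: consistent with the approach above, deciding whether the remaining risk is acceptable stays with the agency and its CIO.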


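[1] You should be familiar with the 25 Point Plan to Reform Federal IT – https://www.dhs.gov/sites/default/files/publications/digital-strategy/25-point-implementation-plan-to-reform-federal-it.pdf

[2] FedRAMP memo, “Security Authorization of Information Systems in Cloud Computing Environments” (December 2011) – https://cio.gov/wp-content/uploads/2012/09/fedrampmemo.pdf

[3] NIST Special Publications – http://csrc.nist.gov/publications/PubsSPs.html

[4] NIST SP 800-37 Rev. 1, Guide for Applying the Risk Management Framework to Federal Information Systems – http://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-37r1.pdf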
